End-to-end Product Design

A self-service web platform that empowers domain experts to update, validate, and manage AI models without coding expertise


The Machine Learning Model Management Portal (ML Center) is an internal web application that empowers domain and subject-matter experts to manage, update, and maintain their machine learning (ML) models without requiring deep technical or coding expertise.

Previously, model maintenance relied heavily on the central data science team, creating delays and bottlenecks in model updates. ML Center bridges that gap by providing an intuitive interface that enables users to:

- Upload new data for training and testing

- Validate and compare ML models

- Manage datasets across multiple data types (e.g., image, tabular, point cloud, seismic)

- Collaborate with global teams for shared insights

The platform supports Fugro’s mission of decentralizing ML capabilities, allowing business line experts to fine-tune models relevant to their domain — improving both efficiency and model quality across global operations.

Role: UX Designer

My Contribution: User Researcher, UX Designer, UI Designer, User Testing

Team size: 7

Duration: 4 months

Tools used: Figma, Miro, Balsamiq

Fugro’s diverse business lines (Hydrography, Geophysics, Offshore Wind, etc.) relied on centralized machine learning models that often failed to perform consistently across unique regional datasets.

Non-technical users had limited control over model training and validation, leading to:

- Dependence on technical teams for even small updates

- Long turnaround times for model retraining

- Data silos and poor collaboration between regional teams

- Lack of transparency into model performance and update history

This created friction in the ML lifecycle and limited the scalability of AI-driven processes across Fugro’s global projects.

The ML Center aims to provide a user-friendly, self-service platform that lets non-technical users manage ML models, supports all Fugro data formats, enables collaborative version-controlled workflows, speeds up model iterations, and connects regional models to centralized datasets to strengthen Fugro’s global ML strategy.

The ML Management Portal brings clarity and control to complex ML workflows, making model updates and validations more transparent. It supports collaboration across global teams while providing insights into model performance and data lineage. Explore the interactive prototype video to see how users navigate and manage their ML tasks seamlessly.

Stakeholders reported significantly increased confidence in ML outcomes, as they could now visualize performance changes, understand data dependencies, and make informed decisions about deploying new models.

Expected 60% reduction in turnaround time, along with improved model accuracy through local fine-tuning and automated validation.

Subject-matter experts can independently retrain regional models, saving time for the ML Engineers.

Clear tracking of model versions, datasets, and contributors.

Shared model repositories connect departments and regions.

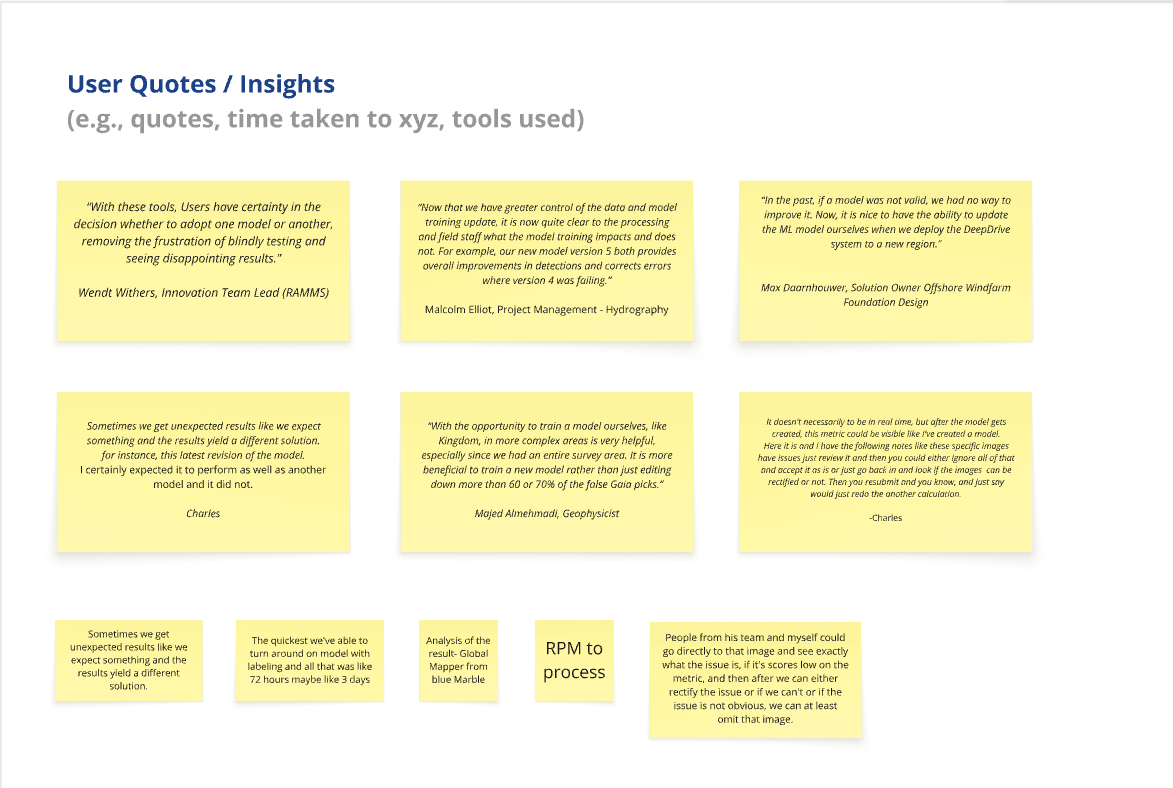

The UX research began with job-shadowing sessions to observe how data and ML models were currently managed across projects. Key insights emerged from observing workflows in the RAMMS, DeepDrive, and Kingdom applications.

Interviews were conducted with stakeholders across multiple roles:

• Project Managers (Hydrography)

• Geophysicists (Seismic, RAMMS)

• Innovation Team Leads

• Solution Owners (Offshore Wind)

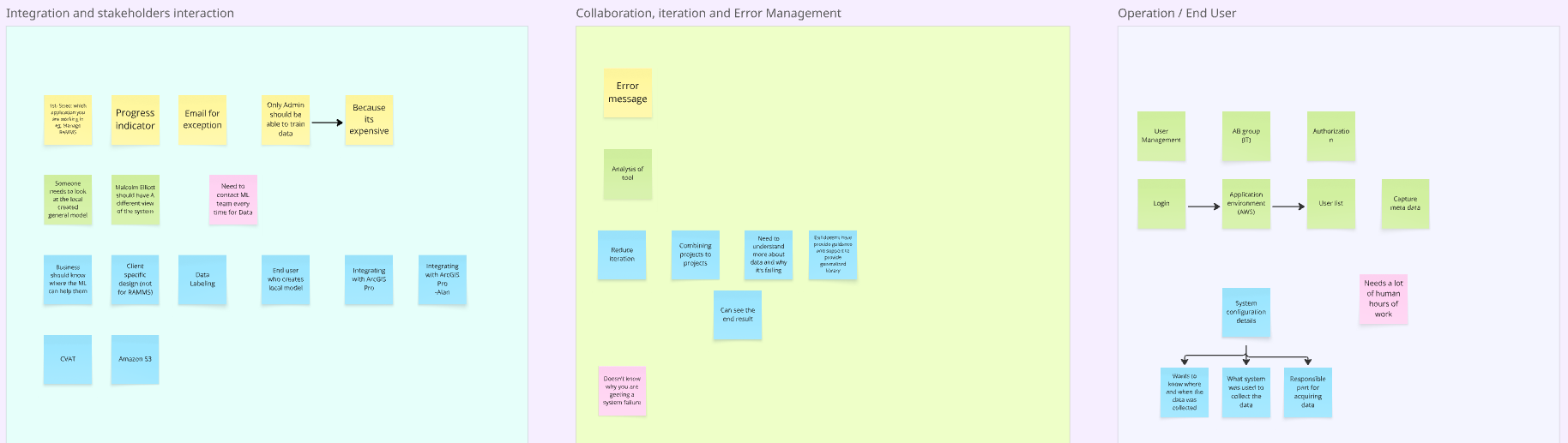

Key Insights from Interviews:

• “We often don’t know why the system fails or which data causes errors.”

• “Model retraining requests go through multiple technical teams — it’s slow.”

• “We need visibility on who changed what, when, and why.”

• “Sometimes retraining locally gives better results than relying on global models.”

These insights informed the creation of features like automated validation testing, data traceability, and multi-level model management (local, generalized, and global).

![Pain points and goals](%20-%20pain%20points%20and%20goals.jpg)

![General Notes](%20-%20General%20Notes.jpg)

The following user flow is mapped from the perspective of the ML Engineer and illustrates how they currently manage and train a model. While the image below may be hard to read in detail, it shows how I analyzed the observation notes to map the current workflow. Reach out for more details.

![Current flow](%20-%20Current%20flow.jpg)

In the Define phase, I analyzed user insights to identify key pain points and goals. Using an Affinity Diagram, I organized patterns from the observations, which guided the creation of personas representing the ML Engineer and the Stakeholder.

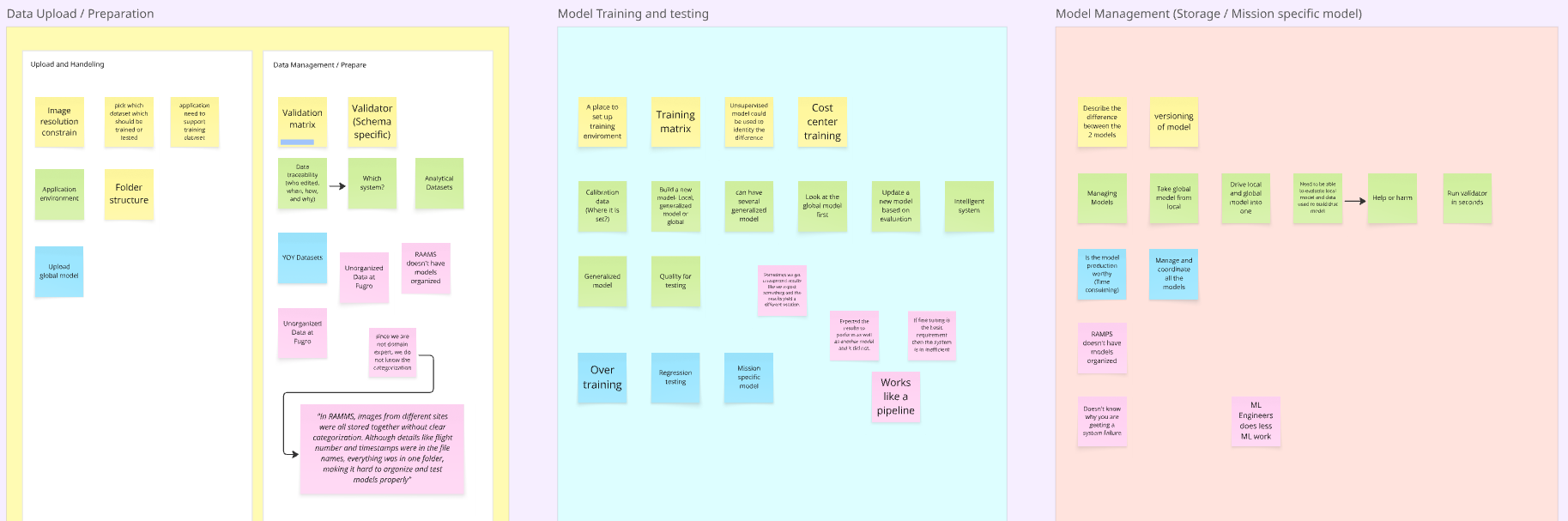

I used an Affinity Diagram to organize insights from user interviews and observations into six key themes — Data Upload & Preparation, Model Training & Testing, Model Management, Integration & Collaboration, Error Management & Iteration, and End User Operations. These clusters highlighted user challenges across the ML workflow and guided the creation of personas and core product features.

Based on insights from interviews and shadowing sessions, I developed personas representing key user roles — such as ML Engineers, Project Managers, and Geophysicists. These personas captured their goals, frustrations, and workflows, helping maintain a clear user-centered focus throughout the design process. They also guided feature prioritization and ensured that each design decision aligned with real user needs.

![Team 3's Persona](%20-%20Team%203%27s%20Persona.jpg)

![Team 1's Persona](%20-%20Team%201%27s%20Persona.jpg)

During the Explore phase, I transitioned from research to design exploration. Using insights from personas and affinity mapping, I created early concepts and low-fidelity wireframes to visualize user flows and key interactions. This phase focused on validating layout ideas, task sequences, and usability through quick iterations and feedback sessions with stakeholders.

I developed an Information Architecture (IA) in collaboration with the Product Owner to define the structure and hierarchy of the ML Center platform. The IA organized complex workflows — including data management, model training, and validation — into a clear and intuitive navigation system. This blueprint ensured logical task grouping, minimized cognitive load, and supported seamless transitions across the platform’s modules.

![Information Architecture](%20-%20IA%20(1).jpg)

The ML team lead initially developed a preliminary wireframe before the discovery phase, which served as a starting point for the project. I refined this concept into low-fidelity wireframes, incorporating insights from user research and the IA to improve workflow clarity, feature placement, and task efficiency. I also drew inspiration from the original wireframe while iterating with stakeholders and Subject-Matter Experts, validating core interactions and ensuring the design met real user needs before progressing to mid-fidelity prototypes.

![Low-fidelity wireframe](%20-%20Low-fi%20wireframe.jpg)

Building on the low-fidelity wireframes, I developed mid-fidelity wireframes to add more detail and structure to the user interface while maintaining focus on usability and workflow. These wireframes incorporated visual hierarchy, interactions, and data presentation elements, reflecting insights from user research and feedback from subject-matter experts. I also drew inspiration from the ML team lead’s initial wireframe, refining layouts and navigation to better support model management, validation, and collaboration tasks. This stage allowed for further iteration and alignment before moving into high-fidelity designs.

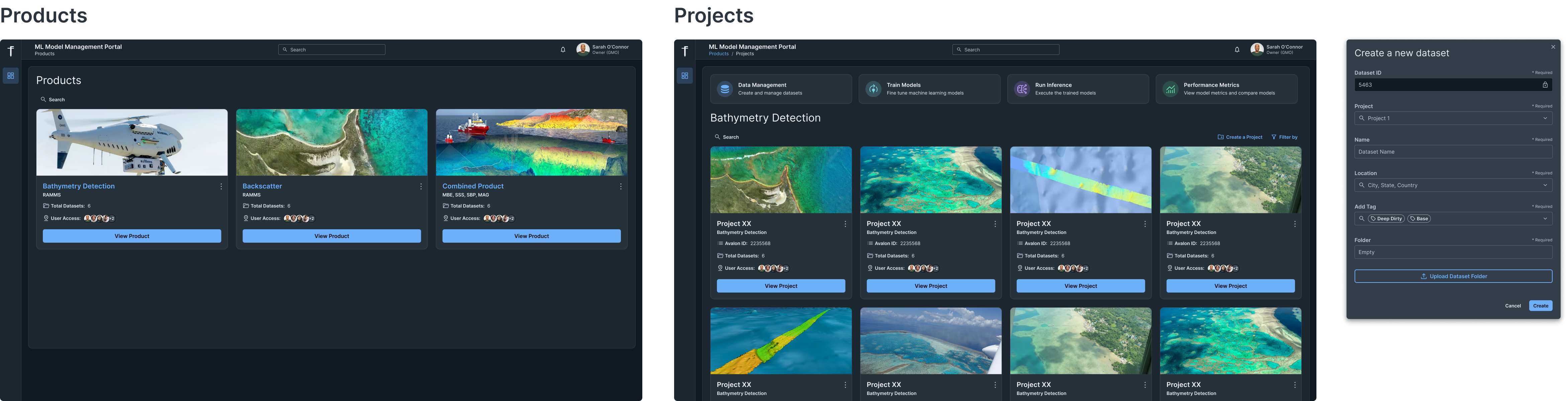

In the Delivery Phase, I focused on validating and finalizing the design for development. User testing sessions with subject-matter experts helped identify usability issues, refine interactions, and ensure the platform met real user needs. Based on feedback, I created high-fidelity designs with polished visuals, clear hierarchy, and interactive elements to accurately represent the final product. Finally, I prepared a detailed handoff for the development team, including specifications, assets, and interaction guidelines, ensuring a smooth transition from design to implementation.

Date: 10/10/2024

Tester: Akash

Participant: Charles

Session Duration: 1 hour

1. Test Objectives

Evaluate Usability:

- Assess how easily users can navigate the interface.

- Identify any usability issues or pain points.

Understand User Behavior:

- Observe how users interact with the product.

- Determine if users follow the expected paths or workflows.

Gather User Feedback:

- Collect qualitative feedback on the overall user experience.

- Identify areas for improvement based on user suggestions.

Validate Design Decisions:

- Confirm that design choices meet user needs and expectations.

- Ensure that the design aligns with user mental models.

2. Participant Background

Role: Delivery Excellence Engineer

Experience Level: Expert

Familiarity with Product: First-time user

3. Task Scenarios

Task 1: Create Dataset / Upload Dataset

Success Rate: Success

Time Taken: 3 mins

Observations: Initially took some time to find the "Create Dataset" button but eventually located it.

Issues Encountered: Slight confusion at the start

Task 2: Send Datasets for labeling

Success Rate: Success

Time Taken: 2 mins

Observations: Found it easy

Issues Encountered: N/A

Task 3: Train a model

Success Rate: Success

Time Taken: 5 mins

Observations: A warning pop-up was missing

Issues Encountered: N/A

In the high-fidelity stage, I translated the mid-fidelity wireframes into polished, visually detailed designs for the ML Center platform, using Fugro's design system while creating custom components to meet unique workflow needs. The designs incorporated refined typography, color schemes, data visualizations, and interactive elements to ensure clarity and usability for complex ML processes. These screens were iterated multiple times based on feedback from target users and stakeholders, ensuring the final designs were both user-centered and development-ready.

This project reinforced the critical role of thorough user research in shaping effective design solutions. Through interviews, shadowing, and workflow observations, I gained a deep understanding of the challenges faced by ML engineers and project managers, which directly informed personas, affinity diagrams, and the information architecture. I learned how to translate these insights into actionable design decisions, balancing the company’s design system with custom components to meet unique needs. Iterating wireframes and high-fidelity designs based on feedback from subject-matter experts highlighted the importance of continuous validation and collaboration. Overall, the project strengthened my skills in user-centered design, research-driven problem solving, and designing complex workflows that empower non-technical users.
